LinearRegression--python

All code in this series is based on https://github.com/lawlite19/MachineLearning_Python

import numpy as np

from matplotlib import pyplot as plt

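# Linear regression

# X is a matrix in which each row is one input sample; theta is a column vector, and so is y

def ComputeCost(X, y, theta):
    m = len(y)  # number of training samples
    t = np.dot(X, theta) - y  # residuals between predictions and targets
    J = np.dot(t.T, t) / (2 * m)  # squared-error cost, returned as a 1x1 array
    return J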
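As a quick sanity check of the cost J(theta) = (X·theta − y)ᵀ(X·theta − y) / (2m), here is a minimal sketch on made-up data. The names `compute_cost`, `X_demo`, and `y_demo` are illustrative and do not come from the referenced repository; the only difference from the function above is that this version returns a plain float instead of a 1x1 array.

```python
import numpy as np

def compute_cost(X, y, theta):
    """Squared-error cost (X@theta - y)^T (X@theta - y) / (2m) as a float."""
    m = len(y)
    residual = X @ theta - y
    return float((residual.T @ residual).item() / (2 * m))

# Toy data, made up for illustration: y = 1 + 2*x exactly.
# The first column of ones is the intercept term.
X_demo = np.array([[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]])
y_demo = np.array([[1.0], [3.0], [5.0]])

cost_at_zero = compute_cost(X_demo, y_demo, np.zeros((2, 1)))
cost_at_truth = compute_cost(X_demo, y_demo, np.array([[1.0], [2.0]]))
print(cost_at_zero, cost_at_truth)
```

With theta set to zero the residuals are just −y, so the cost is (1 + 9 + 25) / 6; with the true parameters the cost is zero.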

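# Gradient descent; the cost function is evaluated after every update

# alpha is the learning rate; num_iters is the number of iterations

def GradientDescent(X, y, theta, alpha, num_iters):
    m = len(y)
    n = len(theta)
    temp = np.matrix(np.zeros((n, num_iters)))  # holds the theta computed at each iteration, stored as a matrix
    J_history = np.zeros((num_iters, 1))  # records the cost at each iteration
    for i in range(num_iters):  # loop over the iterations
        h = np.dot(X, theta)  # predictions; matrices can be multiplied directly
        temp[:, i] = theta - ((alpha / m) * (np.dot(X.T, h - y)))  # gradient step
        theta = temp[:, i]
        J_history[i] = ComputeCost(X, y, theta)  # call the cost function
        # print(J_history[i])
    return theta, J_history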
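One way to check an implementation like the one above is to run it on a small problem whose answer is known and compare it with a least-squares solve. The sketch below is an assumption-laden variant, not the repository's code: it uses plain arrays instead of `np.matrix`, and the names `gradient_descent`, `X_demo`, `y_demo`, and the chosen `alpha` and `num_iters` are made up for illustration.

```python
import numpy as np

def gradient_descent(X, y, theta, alpha, num_iters):
    """Batch gradient descent for linear regression with a squared-error cost."""
    m = len(y)
    costs = np.zeros(num_iters)
    for i in range(num_iters):
        error = X @ theta - y                      # residuals for the current theta
        theta = theta - (alpha / m) * (X.T @ error)  # step against the gradient
        residual = X @ theta - y
        costs[i] = (residual.T @ residual).item() / (2 * m)  # cost after the update
    return theta, costs

# Toy data, made up for illustration: y = 1 + 2*x exactly.
X_demo = np.array([[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0]])
y_demo = np.array([[1.0], [3.0], [5.0], [7.0]])

theta_gd, costs = gradient_descent(X_demo, y_demo, np.zeros((2, 1)),
                                   alpha=0.1, num_iters=2000)

# Reference solution from NumPy's least-squares solver.
theta_exact, *_ = np.linalg.lstsq(X_demo, y_demo, rcond=None)
print(theta_gd.ravel(), theta_exact.ravel())
```

If the two results disagree noticeably, the usual suspects are a learning rate that is too large (the cost grows instead of shrinking) or too few iterations.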

def featureNormaliza(X):
    X_norm = np.array(X)  # convert X to a NumPy array so that array arithmetic works
    mu = np.mean(X_norm, 0)  # mean of each column (0 selects columns, 1 selects rows)
    sigma = np.std(X_norm, 0)  # standard deviation of each column
    for i in range(X_norm.shape[1]):  # loop over the columns
        X_norm[:, i] = (X_norm[:, i] - mu[i]) / sigma[i]  # normalize to zero mean and unit standard deviation
    return X_norm, mu, sigma
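The column loop above can also be written with NumPy broadcasting, and the returned `mu` and `sigma` are what you need to scale any new sample the same way. The following is a minimal sketch under stated assumptions: the name `feature_normalize` and the sample values are made up, the input is cast to float so integer data is not truncated, and no column is constant (a zero standard deviation would cause a division by zero).

```python
import numpy as np

def feature_normalize(X):
    """Standardize each column to zero mean and unit standard deviation."""
    X = np.asarray(X, dtype=np.float64)  # cast so integer inputs are not truncated
    mu = X.mean(axis=0)                  # one mean per column
    sigma = X.std(axis=0)                # one standard deviation per column
    return (X - mu) / sigma, mu, sigma   # broadcasting applies mu and sigma row by row

# Made-up samples with two features on very different scales.
X_demo = np.array([[2104.0, 3.0], [1600.0, 3.0], [2400.0, 4.0], [1416.0, 2.0]])
X_scaled, mu, sigma = feature_normalize(X_demo)

# A new sample must be scaled with the training mu and sigma, not its own.
new_sample = np.array([[1650.0, 3.0]])
new_scaled = (new_sample - mu) / sigma
print(X_scaled.mean(axis=0), X_scaled.std(axis=0), new_scaled)
```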

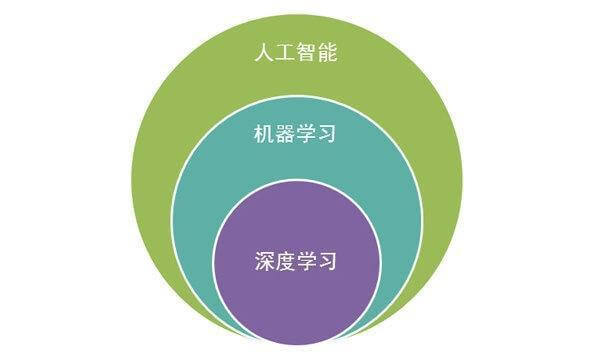

# Run linear regression

if __name__ == "__main__":
    alpha = 0.01
    num_iters = 400

    # Parameters to use when feature normalization is turned off
    # alpha = 0.0000001
    # num_iters = 4000

    data = np.loadtxt('data.txt', delimiter=',', dtype=np.float64)
    X = data[:, 0:-1]  # every column except the last is a feature
    y = data[:, -1].reshape(-1, 1)  # the last column is the target, as a column vector
    X, mu, s = featureNormaliza(X)

    # plt.scatter(X[:, 0], X[:, 1])
    # plt.show()

    X = np.hstack((np.ones((X.shape[0], 1)), X))  # prepend a column of ones for the intercept term
    theta = np.zeros((X.shape[1], 1))
    theta, J_history = GradientDescent(X, y, theta, alpha, num_iters)

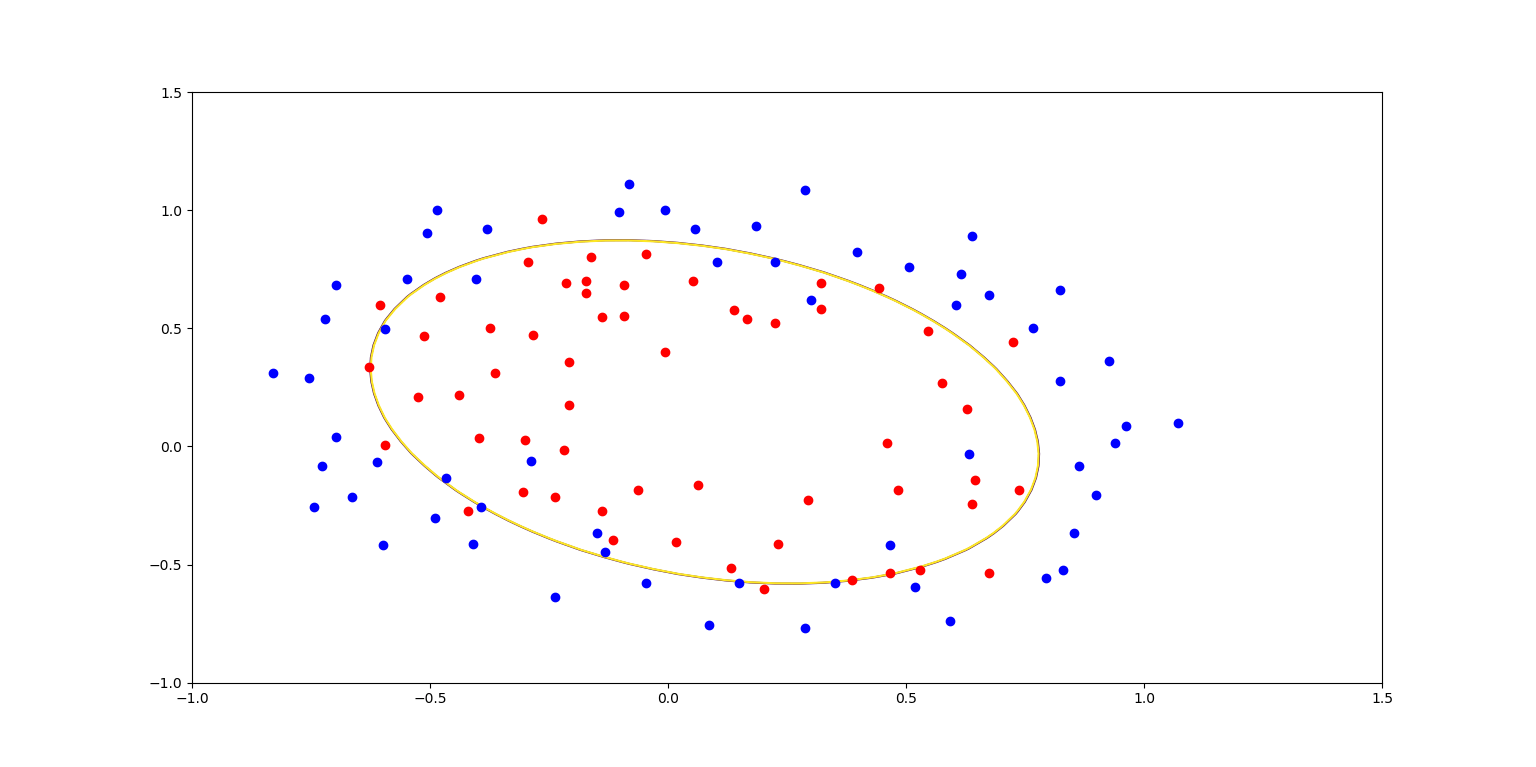

    x = np.arange(1, num_iters + 1)  # iteration numbers for the horizontal axis
    plt.plot(x, J_history)  # cost against iteration number
    plt.show()
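Once training has finished, a prediction for a new input has to go through the same two preprocessing steps as the training data: scaling with the stored mean and standard deviation, then prepending the intercept column. The sketch below illustrates this with a hypothetical `predict` helper; the numbers in `mu_demo`, `sigma_demo`, and `theta_demo` are invented stand-ins for the `mu`, `s`, and `theta` produced by the script, not real results.

```python
import numpy as np

def predict(x_new, mu, sigma, theta):
    """Scale new samples with the training statistics, add the bias column, apply theta."""
    x_scaled = (np.asarray(x_new, dtype=np.float64) - mu) / sigma
    x_with_bias = np.hstack((np.ones((x_scaled.shape[0], 1)), x_scaled))
    return x_with_bias @ np.asarray(theta)

# Invented values standing in for the outputs of training.
mu_demo = np.array([2000.0, 3.0])
sigma_demo = np.array([500.0, 1.0])
theta_demo = np.array([[300.0], [100.0], [-5.0]])

prediction = predict(np.array([[2500.0, 4.0]]), mu_demo, sigma_demo, theta_demo)
print(prediction)
```

Here both features sit exactly one standard deviation above their means, so the prediction is simply the sum of the three entries of `theta_demo`.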

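Although the algorithm itself is not very hard to understand, quite a few problems still come up once you sit down and implement it yourself.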

All articles in this blog are licensed under CC BY-NC-SA 4.0 unless stating additionally.
